Advanced Wizard Guide to Dune SQL and Ethereum Data Analytics

Giving you the frameworks and functions you need to become an archwizard

You should already know basic SQL and how to navigate onchain data in Dune tables. If not, start with the beginners guide:

If you understand one protocol like Uniswap v2, but cannot easily break down other protocols by yourself - you are at the right level for this guide. Advanced analysts are able to quickly break down any protocol because we carry a mental framework of standards as well as a large query/function toolkit.

In this guide, you’ll learn to navigate Ethereum protocol standards and use advanced SQL functions on call traces, time series balance tracking, dex/nft trades, and ABIs.

What is a standard, and why do they matter if I’m an analyst?

Protocols contain a set of smart contracts to efficiently manage a functionality, state, governance, and extensibility. Smart contracts can get infinitely complex, with a single function calling dozens of other contracts. These contract structures evolve over time, with some of the most common patterns becoming standards. Standards are tracked in Ethereum Improvement Proposals (EIPs), creating a set of implementation references (a library of code snippets).

Some examples of patterns that grew into standards:

Factories that create clones (like Uniswap factory creating new pair contracts or Argent creating smart contract wallets) led to optimization with minimal proxies (EIP-1167).

Deposit/withdrawal tokens like WETH, aTokens, and even LP positions like Uni-v2 became token vaults (EIP-4626).

ENS, Cryptopunks, Cryptokitties, and other non-fungible tokens led to today's NFT standard (EIP-721). There have been many improved implementations since, like ERC721A.

The standards above were just implementation agreements (reference) contracts that didn’t require changes to the Ethereum Virtual Machine (EVM). Those are known as ERC standards. Some patterns, like brute forcing a contract deployment at a semi-specific address, led to adding a CREATE2 opcode (EIP-1014) at the EVM level, making it a CORE standard. This is how we can have Opensea deploy Seaport at the same address across all chains (making everyone’s lives easier).

I recommend starting with the main ERC standards and understanding the top example contracts of each one. You can learn these standards in my dashboard covering each of them:

These standards matter because they are commonly used across all protocols. No matter what unique complexities have been added, if it’s a DEX then you know to first look for where the ERC20 tokens are being held for liquidity and to look for some transfer in and out of that liquidity pool. Then you can piece together protocol intricacies from there.

Many patterns, such as oracles and utilization rate curves, have not yet been made into EIP standards. But EIPs aren’t the only place to find standards, you can find them at the protocol level with initiatives like Opensea’s Seaport Improvement Proposals (SIPs) as well.

If you approach protocols with the mentality of “what have they built upon existing patterns,” your life as an analyst will be much easier and more fun.

I’m going to refer to patterns as “standards” for the rest of this article for simplicity's sake.

Spellbook tables and analyst queries are just abstractions of standards.

A standards-first approach also makes understanding spellbook tables and other analysts’ queries easier. Let’s use dex.trades as an example. I have some assumed standards that DEXs must follow most of these patterns to remain composable with the rest of the ecosystem.

Below is an example checklist when I look at a new DEX on the dex.trades table:

standard: You can swap one ERC20 token for another ERC20 token. We represent each swap as a single row in the table.

standard: Liquidity must already be sitting somewhere, likely in some ERC4626-esque vault (where some new token represents the deposited liquidity).

dig into: we like to put the “liquidity contract” that best represents the swapped token “pair” here in the

project_contract_addresscolumn.

standard: Liquidity contracts hold two or more tokens.

dig into: I’ll put

project_contract_addressinto Etherscan and see what tokens the contract holds (if it holds any, some like Balancer v2 use internal balance tracking between pools).

standard: It is common for this liquidity contract to be produced from a factory.

dig into: I’ll then click into the

project_contract_addresscreation tx link and note if there is factory contract. If there is not, then that signals to me that each trading pair might be really complex - or that it only supports a certain kind of token/mechanism.

standard: It is common for the user to call some “router” contract instead of the liquidity contract (ETH → USDC, then USDC → Aave to complete an ETH → Aave swap).

dig into: the top-level contract that was called by some user (wallet signer) is the

tx_to. How many contract calls sit between the first contract called and the actual liquidity contract (project_contract_address)?Is the top-level contract DEX/aggregator related, or is it something more complex like ERC4337?

standard: Different DEXs can be optimized for different types of tokens

dig into: the address of the token that was sold (swapped in) or bought (swapped out) are

token_sold_address&token_bought_addressrespectively.What kind of tokens are usually bought/sold? (rebasing, stable, volatile, etc.).

Where did this token come from? Was it a normal ERC20 or some more special wrapper?

Some tokens have additional logic tied to transfers. I’ll check the transaction logs to see if either token emitted special events (like compound delegation transfers).

standard: Yield farming/rewards standards tied to incentivizing liquidity on pools

dig into: again looking at the

project_contract_addresscreation tx link to see if any other contracts created by the init transaction

As I go through this checklist, I’ll note down interesting transaction examples and tables using my EVM quickstart dashboard.

I’ve also built helper queries like this transaction explorer query that shows you all the tables to query for a given transaction hash:

This standards-first approach to analysis solidifies my understanding of this new DEX in the context of existing DEX standards.

CHALLENGE: Go try out 12 days of Dune to learn Uniswap v2 and then take this above approach with Uniswap v3, Balancer v2, or Curve Regular.

Advanced SQL Functions

With those Ethereum conceptual tips in mind, let’s do some advanced queries and functions. We’ll cover the following topics today:

DuneSQL Specific Functions and Types

Advanced Transformations (values/CSV, pivoting, window functions, cross join, unnest, cumulative summation, forward/backfilling values)

Array Functions

JSON Functions

For this guide, the SQL examples will all be Ethereum focused. Remember that DuneSQL is just a fork of Trino SQL.

You should check out our query optimization checklist if you’re curious about optimizing your queries. Dune also has features like larger engines and materialized views to superpower your queries.

DuneSQL Specific Functions and Types

Dune has added a set of functions and types to make working with blockchain data more accessible and accurate (on top of the existing TrinoSQL functions).

First, get familiar with the varbinary type. Varbinary is any value with a 0x prefix (also called bytes/hex), and it is almost 2x faster to query than varchar. You may run into spellbook tables or uploaded datasets where some 0x value is typed as varchar - in such cases, you should use from_hex(varchar_col) when doing any filters or joins. Some longer varbinary values like function calldata or event log data can then be split into chunks (typically 32 bytes or 64 characters using bytearray_substring) and then transformed to numbers (bytearray_to_uint256 ), strings (from_utf8) , or booleans (0x00=FALSE, 0x01=TRUE). You can see all the bytearray functions with examples in the docs.

You need to convert varbinary to varchar type for string-based functions like CONCAT(), which is common when creating links to some chain explorer. We’ve created functions like get_href() and get_chain_explorer_address() to make this easier - you can find examples here.

The other unique DuneSQL types are unsigned and signed integers (uint256 and int256). These custom implementations can capture values more precisely up to 256 bytes, more than the big integer limit of 64 bytes. Note that uint256 can’t go negative, and both have math operations like division that are not compatible. In those cases, you should cast(uint256_col as double) . You’ll see me do this later when dividing for token decimals.

If you are working with Bitcoin or Solana data, they store a lot of values as base58 encoded. You’ll need to use frombase58(varchar) to convert the values to varbinary, and then you can use all the same operations from above. A good Bitcoin example is identifying Ordinals, and a good Solana example is decoding transfer data.

Advanced Transformations

Values and CSV Uploads

You will run into many situations where you need to use some custom mappings. For example, let’s take my query of all ERC standard deployments. You’ll see I use a VALUES () as table(col1, col2, col3) pattern to define a “table” I can work with as a CTE:

--simplified from actual query

SELECT

*

FROM (

values

(1,'ERC20','Token','Fungible Token'),

(2,'ERC721','Token','Non-Fungible Token'),

(3,'ERC777','Token','Fungible Token (Operator Managed)')

) as v(erc_order,erc_id, erc_type, erc_summary)I could have also uploaded this as a csv instead and queried that as a table, but since it was a really small mapping I did it manually in the query.

Pivoting

Pivoting usually means expanding one column with some distinct categories into many columns (one for each category) or vice versa to collapse many columns into a single one. In the same ERC query above, I first took the count of contract deployments by erc_id and month in a GROUP BY. That gave me about 30 rows per month, one for each ERC. I wanted each ERC to be its own column I could chart, with however many months Ethereum has been live to be the number of rows. To do this, I take a SUM(case when erc_id = 'ERC20' then contracts_deployed else 0 end) for each value of erc_id. Note that DuneSQL (TrinoSQL) does not natively have a pivot function yet to do this dynamically.

I didn’t want to type out the same thing 30 times, so I gave chatgpt an example and asked them to write it for me. You should use chatgpt to write most of your queries once you have figured out the basic logic (or use our Dune LLM wand feature)!

Window Functions

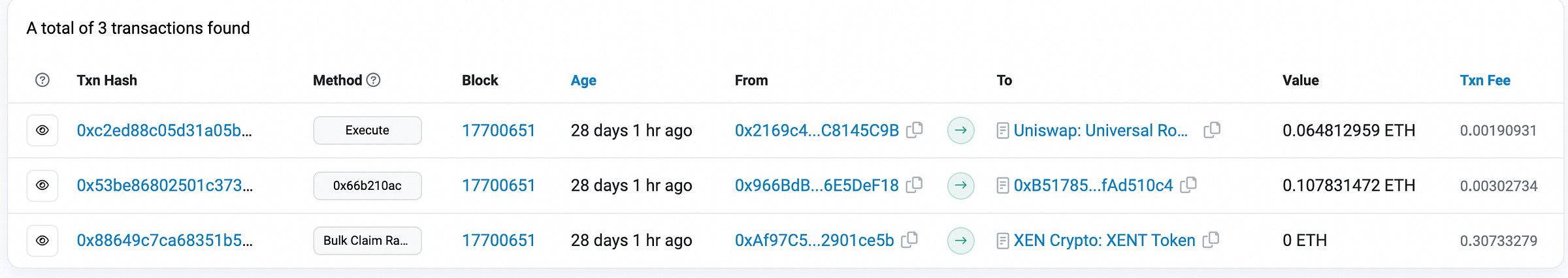

Window functions can be hard to understand at first because it requires you to visualize a table as many distinct tables. These “distinct tables” are split from the main table using a “partition”. Let’s start with a simple example of figuring out what the address of the last call (trace) of each transaction in a block was. I found a block with just three transactions:

If you aren’t familiar with traces, they are the set of all calls that follow the root transaction call. So in that first transaction above to the Uniswap router, the router will call the liquidity contracts, which will then call the token contracts to send the tokens back and forth. There is an array that increments as the calls get chained called trace_address. We want to get the largest trace_address value for each transaction. To do that, we will partition by tx_hash and then assign a counter value to each trace_address in descending order such that the largest trace will have a value of 1. The SQL code looks like this:

SELECT

*

FROM (

SELECT

to

, tx_hash

, trace_address

, row_number() over

(partition by tx_hash order by trace_address desc) as last_trace

FROM ethereum.traces

WHERE block_number = 17700651

)

WHERE last_trace = 1row_number() is a function that can be used on windows, and it just counts up from 1 based on the ordering of the rows. It treats each tx_hash as its own table (window), and then applies the function. One of the traces goes 257 calls long at the first level!

If you run the query without last_trace = 1 you will see the incremental count restarting thrice (once for each transaction). Many different functions can be used with windows, including typical aggregation functions like sum() or approx_percentile(). We’ll use more window functions in the following example.

Sequence, Unnest, Cross Join, Cumulative Sum, Forward/Backfilling Values

These five are essential functions for time series analysis. These concepts also give people the most trouble, so if it doesn’t make sense, go play with the next few subqueries by tweaking different lines.

I’ll showcase these concepts using a query that captures the historical notional and USD balance of ETH, ERC20, and NFT tokens held by an address.

The query begins by calculating the total tokens spent or received in eth transfers, erc20 transfers, nft transfers, and gas spent - if you aren’t already familiar with the tables and logic there, it is essential you take the time to understand those CTEs.

An address may have been active on some days and inactive on others - we will need to create a table to fill in the missing days in the middle to get an accurate view. I date_trunc everything to months because days take a lot longer to compute. We use an unnest when creating all the “months” since the first transfer into an address.

, months AS (

with time_seq as (

SELECT

sequence(

cast((SELECT min(month) FROM total_portfolio) as timestamp)

, date_trunc('month', cast(now() as timestamp))

, interval '1' month) as time

) --there is no FROM here; it's not actually required!

SELECT

times.month

FROM time_seq

LEFT JOIN unnest(time) as times(month) ON true

),sequence will create an array of values from the first input to the second input, with the interval of the third input. I want each array value to be a row, so I used unnest on the “time” column I created and renamed it “month” in the alias. I used a LEFT JOIN ... on true to make each array value appear once, since the time_seq table just had one value. I would have gotten duplicate months if the time_seq table had two row values. This can get hard to keep track of when you unnest more complex structures like JSON too (which we will do at the end of the article).

Now, I not only need to track the balance every month but also the balance of every distinct token (contract address) ever held by the address. This means I need to use a CROSS JOIN, which will take any set of columns and create every possible combination of them.

distinct_assets as (

SELECT DISTINCT asset, asset_address FROM total_portfolio

),

agg_to_month AS (

SELECT

t.month

, t.asset

, t.asset_address

, sum(COALESCE(token_nominal,0)) AS token_nominal_diff

FROM (SELECT * FROM distinct_assets, months) t --CROSS JOIN

LEFT JOIN total_portfolio mpf ON mpf.month = t.month

AND mpf.asset=t.asset AND mpf.asset_address = t.asset_address

group by 1,2,3

)

I track both asset type and asset_address because some contracts can mint both erc20 and NFT tokens. The key here is the SELECT * FROM distinct_assets, months, which takes the three columns of “month”, “asset”, and “asset_address” and creates a table like this:

The subquery above also joined all the balance changes, meaning I can use a cumulative sum over the differences to capture the total balance of any token on any month. This is effectively one way to forward fill values easily. To do a cumulative sum, I use sum() inside of a window function, partitioned by each asset and asset_address, summing upwards starting from the earliest month.

, cumulative_portfolio as (

SELECT

month

, asset

, asset_address

, token_nominal_diff

, SUM(token_nominal_diff) OVER (PARTITION BY asset, asset_address ORDER BY month ASC) AS cum_amt

FROM agg_to_month

ORDER BY month DESC

)

In the last part of the query (after I join in prices from our external API, DEX prices, and NFT trading prices), I want to forward fill and backfill the prices for any days where there weren’t any trades, or we don’t have API data. I use a creative COALESCE, LEAD/LAG, and IGNORE NULLS window function for this. It takes the last (or next) non-null value as the value for a specific month if that month does not already have a price.

, filled_portfolio_prices as (

SELECT

*

, coalesce(price, lag(price, 1) IGNORE NULLS OVER (PARTITION BY asset, asset_address ORDER BY month asc)) as forward_price

, coalesce(price, lead(price, 1) IGNORE NULLS OVER (PARTITION BY asset, asset_address ORDER BY month asc)) as backward_price

FROM portfolio_prices

)

SELECT

*

, cum_amt*COALESCE(price, forward_price, backward_price) as cum_amt_usd

FROM filled_portfolio_prices

The query is pretty dense. Go and run each CTE step one by one and tweak things along the way if you want to understand what is going on! To work with these functions well, you need to get good at visualizing mentally what each CTE table looks like without having to run it.

Array Functions

Arrays are a list of values of the same type, with the index starting from 1. You can create an array like this array[1,2,3]. But more commonly, you’ll be creating arrays during aggregations. Below is a query that takes the smartest NFT traders and aggregates their total volume and collections traded in the last 30 days.

SELECT

COALESCE(buyer,seller) as trader

, array_agg(distinct collection) as collections --array aggregation

, sum(amount_usd) as usd_traded

, count(*) as total_trades

FROM nft.trades

WHERE blockchain = 'ethereum'

AND block_time > now() - interval '1' month

AND

(

buyer IN (SELECT address FROM labels.nft_smart_trader_roi_eth WHERE name = 'Top 1% Smart NFT Trader (ROI Realized in ETH w filters)')

OR seller IN (SELECT address FROM labels.nft_smart_trader_roi_eth WHERE name = 'Top 1% Smart NFT Trader (ROI Realized in ETH w filters)')

)

group by 1

order by sum(amount_usd) desc

There are also quite a few functions you can apply to arrays too. You can use cardinality to get the length of the array, and also contains or contains_sequence to check the existence of values in an array. Let’s say we wanted to determine what swap routes most commonly went through the WETH-USDC pair in Uniswap v2. We could query all Uniswap routers for the path of the swap (an array of ERC20 token addresses) where the path length is at least three tokens long, and either goes WETH → USDC or USDC → WETH in the middle.

--router swap union and getting the two tokens of a pair are earlier in query

select

p.*

, s.path[1] as first_token

, s.path[cardinality(path)] as last_token

, s.call_tx_hash as tx_hash

, s.path as full_path

from all_router_swaps s

LEFT JOIN pair_tokens p on true

where cardinality(s.path) > 2 --at least 3 tokens long in path

and (

contains_sequence(s.path, array[p.token0, p.token1])

OR contains_sequence(path, array[p.token1, p.token0])

)

We can see that the WETH/HEX swap routes through WETH-USDC pair the most often. Here’s a full video explanation of this query if you’re curious.

There are some advanced array functions called “lambda functions”, which are useful for more running complex logic across array values. I’ll cover an example in the JSON section. If you’ve used python/pandas, then these are the “df.apply()” equivalents.

JSON Functions

JSON allows you to combine different types of variables into one nested structure. For getting values out of JSON (there are many additional parameters can be used to control path logic, handling of missing data, and error behavior, which apply to all of these):

json_query(varchar_col, 'strict $.key1.key2'):This function extracts data from a JSON column based on a given path.

It retains the extracted data as a JSON structure in the result, but the type is still varchar.

json_value(varchar_col, 'strict $.key1.key2'):It returns the extracted data as a single value like text, number, or array. It will not return JSON structures.

If you expect a value but it's not returned, consider using

json_queryinstead.

json_extract_scalar(json_col, '$.key1.key2'):same as

json_value, but works when the column is already a JSON type. Confusing, butjson_queryandjson_valuedon’t work on JSON types.

For creating JSON type columns/values:

json_parseis used to convert a JSON-formatted string into a JSON type.json_objectconstructs a JSON object from specified key-value pairs.

The most well-known example of a JSON type from Ethereum is the application binary interface (ABI) that defines all the functions, events, errors, and more for a contract. Here is the transferFrom() portion of the ABI for an ERC20 token:

{

"constant": false,

"inputs": [

{

"name": "_from",

"type": "address"

},

{

"name": "_to",

"type": "address"

},

{

"name": "_value",

"type": "uint256"

}

],

"name": "transferFrom",

"outputs": [

{

"name": "",

"type": "bool"

}

],

"payable": false,

"stateMutability": "nonpayable",

"type": "function"

},

I created a query that lets you easily see all the inputs and outputs of functions from a contract, using the ABI. The “abi” column of “ethereum.contracts” is a JSON array (stored as an array(row())) .

We need to extract inputs/outputs from each value. To do this, I first UNNEST the “abi”, and then I use json_value to get the function name and state mutability. Functions can have multiple inputs and outputs, so I create a new JSON array by extracting the array of inputs[*] . Remember that even though json_query returns a JSON structure, the type is varchar so I need to json_parse it before casting it to JSON array array(row()) .

SELECT

distinct

json_value(functions, 'strict $.name') as signature

, json_value(functions, 'strict $.stateMutability') as function_type_raw

, name

, namespace

, cast(json_parse(json_query(functions,'lax $.inputs[*]' with array wrapper)) as array(row(name varchar, type varchar))) as inputs

, cast(json_parse(json_query(functions,'lax $.outputs[*]' with array wrapper)) as array(row(name varchar, type varchar))) as outputs

, functions

FROM (

SELECT

*

FROM (

SELECT

name

, namespace

, abi

FROM ethereum.contracts

WHERE address = {{contract}}

) a, unnest(abi) as abi_un(functions)

) b

WHERE functions LIKE '%"type":"function"%'

After a few more lines (that I’ve left out above) to cleanup and aggregating all inputs/outputs into a single row per function, we get this:

Now, remember the lambda functions for arrays I mentioned earlier? Let’s use them here. I’m going to filter out view and pure functions from the ABI, and create an array on only the function names. Lambda functions will iterate through each value of the array and apply a certain logic. So first I use filter to only keep functions that satisfy the conditions, and then I put that filtered array through a transform which takes each function ABI json and returns only the “name”. “x” just represents the value of the array, and the portion after the “->” is the function I’m applying to “x”. I also use a window function to only keep the most recently submitted ABI based on the created_at column. The full SQL query for modify functions of contracts with the seaport namespace looks like this:

SELECT

*

FROM (

SELECT

namespace

, name

, transform(

filter(abi, x->json_value(x, 'strict $.stateMutability') NOT IN ('pure', 'view') AND json_value(x, 'strict $.type') = 'function')

, x->json_value(x, 'strict $.name')

)

as modify_function_names

, created_at

, row_number() over (partition by name, namespace order by created_at desc) as decode_order

FROM ethereum.contracts

WHERE namespace = 'seaport'

)

WHERE decode_order = 1CHALLENGE: Want to really test your knowledge of every concept in this guide? Try and reverse engineer that transaction explorer query I referenced earlier on. I use a ton of little tricks in there. :)

Congrats on becoming an Archwizard!

Take your time digesting and practicing these concepts, make sure to fork and play around with each query. Just reading does not count as mastering! You can get really creative in queries with all the standards, types, transformations, and functions you have learned.

Once everything in this article feels second nature to you, then I can comfortably say you are in the top 1% of data wizards on Ethereum. After that you should focus more on building your soft skills like navigating communities, asking great questions, and telling compelling stories. Or go and study up statistics and machine learning - they will become more relevant in the web3 space likely within six months or so.

If you’ve found this guide helpful, go refer the newsletter to a friend or share it on Twitter ❤️

Your content ended up being my only source of learning (apart from practicing and some initial knowledge on the EVM and smart contracts).

Thank you for doing this

I do not know where to share this, so I do this here instead

I've been being in Crypto back and forth for 2 years, then recently I've decided to jump into Web3Data, such a complex concept that I have little, maybe no knowledge about

Dune Analytics educational content, especially from you and Jackie, has been the starting point for me. All your web3-related contents are in my library, though sometimes there's challenges as I have no background as a developer whatsoever, I can always figure things out with the so-called "library" of mine

Do not know really where's next, which way I am heading towards in this field, but I've learnt a lot and studied a lot

So, all in all, just wanna express my appreciation and my thanks to you. And please, keep up the good work, sir